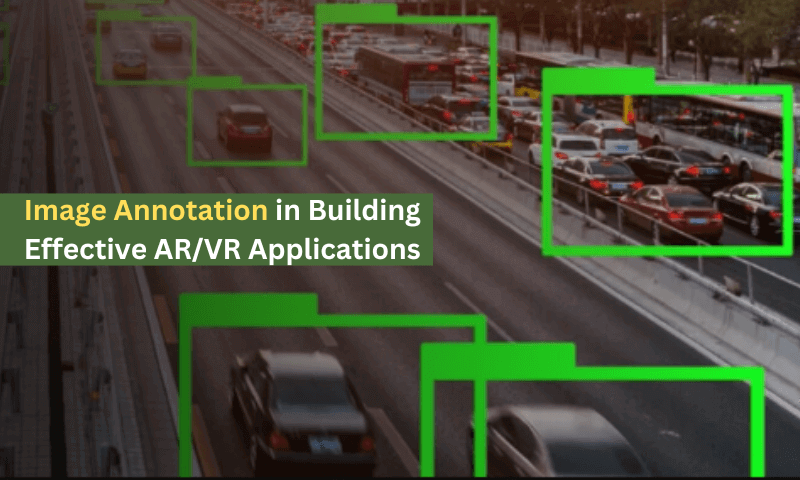

Role of Image Annotation in Building Effective AR/VR Applications

Consider an interior design company that uses an Augmented Reality (AR) tool to let its clients visualize potential furniture arrangements in their office spaces. With a simple smartphone scan, clients can see how different furniture pieces fit and complement their office ambiance, which helps them make informed decisions.

Such innovative solutions are made possible by AR and VR technologies. AR overlays digital information onto real-world objects to create a 3D experience that allows users to interact with both the physical and digital worlds. Virtual Reality (VR), on the other hand, immerses users in a simulated digital environment, replacing the real world entirely.

But have you ever wondered how such immersive experiences come to life? The answer lies behind the scenes, where a crucial element called ‘image annotation’ forms the backbone of many immersive technologies to provide users with these seamless experiences.

What is Image Annotation?

Image annotation is the process of adding descriptive labels or markers to images so that computer vision models can understand and interpret visual data. Annotated datasets give machine learning algorithms the information they need to recognize objects, patterns, and features within images, which improves their accuracy. Trained on such datasets, models learn to “see” images in a way that loosely resembles human perception, enabling applications in fields such as autonomous vehicles, medical imaging, surveillance, and more.

Building Effective AR/VR Applications: Use of Image Annotation

1. Object recognition and tracking

Using annotated datasets, AR/VR systems accurately identify and track objects within the user’s environment to provide seamless and immersive experiences.

Image labeling techniques, such as bounding box annotation, are commonly used for object recognition and tracking. This method involves drawing tight rectangular boxes around objects of interest within images or video frames, providing the data needed to train machine learning models to recognize and track those objects in real time.
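To make the idea concrete, here is a minimal sketch of how a bounding box annotation might be stored and compared against a model's prediction. The `BoundingBox` class and its field names are hypothetical, not taken from any particular annotation tool. The comparison uses intersection over union (IoU), a common overlap measure that this article does not otherwise cover.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Degenerate boxes (max <= min) count as zero area.
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a: BoundingBox, b: BoundingBox) -> float:
    """Intersection over union of two boxes, in the range [0, 1]."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # the boxes do not overlap
    inter = inter_w * inter_h
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


# A human-drawn annotation and a made-up model prediction for the same chair.
annotated = BoundingBox("chair", 10, 10, 50, 50)
predicted = BoundingBox("chair", 30, 30, 70, 70)
score = iou(annotated, predicted)
```

A score near 1 means the predicted box closely matches the annotated one, while a score near 0 means they barely overlap. Tracking pipelines often apply a threshold to such a score when matching detections across frames, though the right threshold depends on the application.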

In AR applications, object recognition and tracking technology uses computer vision algorithms to let virtual objects interact seamlessly with the user’s surroundings. For instance, AR gaming applications can use object recognition to detect surfaces such as tables or floors, so that virtual characters or objects appear realistically within the environment and interact with physical objects. In VR applications, object recognition and tracking enhance the sense of immersion by enabling virtual objects to react dynamically to the user’s movements and interactions. In VR training simulations, for example, object recognition helps track the user’s hands or tools, allowing realistic interactions with virtual objects and environments.

2. Scene understanding

Annotated images provide essential context for AR/VR systems to understand the layout and composition of scenes. They help these systems analyze and interpret the user’s environment comprehensively, which enhances immersion and interaction.

In scene understanding, image annotation techniques such as semantic segmentation and instance segmentation play a vital role. Semantic segmentation involves labeling each pixel in an image with a corresponding class label, such as “road,” “building,” or “person.” This allows AR/VR applications to understand the layout and composition of the scene, enabling more accurate placement and interaction of virtual objects within the user’s environment. Instance segmentation, on the other hand, assigns semantic labels to pixels and also distinguishes between different instances of the same class. For instance, in a crowded street scene, instance segmentation can differentiate between multiple pedestrians, vehicles, and other objects, providing a finer level of detail for scene understanding.
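The difference between the two techniques is easiest to see side by side. The sketch below uses small hand-written masks stored as nested lists. The class ids, the class names, and the convention that instance id 0 means "no countable object" are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical class ids for a small indoor scene.
CLASS_NAMES = {0: "floor", 1: "chair", 2: "table"}

# Semantic mask: one class id per pixel. Both chairs share class id 1.
semantic_mask = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0],
    [2, 2, 2, 0, 0],
]

# Instance mask: one object id per pixel (0 = no countable object, assumed).
instance_mask = [
    [1, 1, 0, 2, 2],
    [1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0],
    [3, 3, 3, 0, 0],
]


def pixels_per_class(semantic):
    """Count how many pixels carry each class label."""
    counts = defaultdict(int)
    for row in semantic:
        for class_id in row:
            counts[CLASS_NAMES[class_id]] += 1
    return dict(counts)


def instances_per_class(semantic, instance):
    """Count distinct object ids within each class."""
    found = defaultdict(set)
    for sem_row, inst_row in zip(semantic, instance):
        for class_id, instance_id in zip(sem_row, inst_row):
            if instance_id != 0:
                found[CLASS_NAMES[class_id]].add(instance_id)
    return {name: len(ids) for name, ids in found.items()}


class_counts = pixels_per_class(semantic_mask)
object_counts = instances_per_class(semantic_mask, instance_mask)
```

The semantic mask alone says that eight pixels are "chair" but cannot say how many chairs there are. The instance mask adds that information, which is what an AR application would need to, say, highlight one specific chair.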

This nuanced understanding facilitates dynamic interactions between virtual and physical objects, as virtual entities can respond intelligently to changes in the real environment.

3. Gesture recognition

Annotated datasets also make gesture recognition possible in AR/VR applications. Two image annotation techniques are commonly used here: keypoint annotation and skeleton tracking. Keypoint annotation involves labeling specific points on the human body, such as joints and fingertips, so that hand movements can be tracked and recognized accurately. This provides the data needed to train machine learning models to interpret and respond to various gestures.
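As a rough illustration, the sketch below shows one way annotated hand keypoints could be turned into a simple gesture check. The keypoint names, the pixel coordinates, and the pinch rule (thumb-to-index distance relative to hand size, with an arbitrary 0.2 threshold) are assumptions chosen for the example. A production system would typically learn gestures from many annotated frames rather than rely on a hand-written rule.

```python
import math

# Hypothetical annotation for one hand: keypoint name -> (x, y) in pixels.
hand_keypoints = {
    "wrist": (100.0, 200.0),
    "thumb_tip": (140.0, 120.0),
    "index_tip": (146.0, 112.0),
    "middle_mcp": (100.0, 100.0),  # knuckle at the base of the middle finger
}


def distance(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def is_pinch(keypoints, threshold=0.2):
    """Return True if thumb and index tips are close relative to hand size.

    Dividing by the wrist-to-knuckle distance makes the rule independent
    of how far the hand is from the camera.
    """
    hand_size = distance(keypoints["wrist"], keypoints["middle_mcp"])
    if hand_size == 0:
        return False  # degenerate annotation, cannot normalize
    gap = distance(keypoints["thumb_tip"], keypoints["index_tip"])
    return gap / hand_size < threshold


pinching = is_pinch(hand_keypoints)
```

Normalizing by hand size is a design choice: a fixed pixel threshold would behave differently for hands near and far from the camera.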

Skeleton tracking takes gesture recognition a step further by capturing the motion of the entire body, allowing for more dynamic and immersive interactions within AR/VR environments. By annotating key skeletal points, such as the head, shoulders, elbows, and hands, developers can create applications that accurately track the user’s movements in real time, enabling gestures to control virtual objects and interfaces seamlessly.
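Once skeletal points are annotated, simple geometry can describe a pose. The following sketch computes the angle at an elbow from three annotated points. The point names and coordinates are hypothetical, and real skeleton tracking systems usually work with more joints and often in 3D.

```python
import math


def joint_angle_degrees(a, joint, b):
    """Angle at `joint` formed by the segments joint->a and joint->b."""
    v1 = (a[0] - joint[0], a[1] - joint[1])
    v2 = (b[0] - joint[0], b[1] - joint[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("keypoints must not coincide with the joint")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))


# Hypothetical annotated skeleton points for one arm, in pixels.
pose = {
    "right_shoulder": (0.0, 0.0),
    "right_elbow": (0.0, 10.0),
    "right_wrist": (10.0, 10.0),
}

elbow_angle = joint_angle_degrees(
    pose["right_shoulder"], pose["right_elbow"], pose["right_wrist"]
)
```

An application could compare such angles across frames to decide, for example, whether the user has raised or bent an arm.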

For instance, in a VR game that requires players to cast spells by making specific hand gestures, gesture recognition enables the system to detect and respond to the player’s movements accurately, providing a more engaging and interactive gaming experience.

Beyond entertainment, gesture recognition is useful in education, training, healthcare, and more. For example, in VR surgical simulations, gesture recognition can enable trainee surgeons to practice complex procedures using lifelike hand movements, providing a safe and realistic training environment.

4. Depth perception

With the help of annotated image datasets, AR/VR systems can accurately perceive spatial depth and distance. This ultimately allows users to assess and interact with virtual objects in three-dimensional space with a sense of realism and depth.

Image labeling techniques such as depth mapping and point cloud annotation are essential for accurately capturing and representing the spatial layout of the user’s environment. Depth mapping involves annotating images or video frames with depth information, typically represented as a grayscale image. In one common convention, darker areas indicate objects closer to the viewer and lighter areas indicate objects farther away, although some tools invert this mapping.
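The grayscale encoding described above can be sketched in a few lines. This example assumes depth values in metres, a linear mapping, and a working range of 0.5 m to 5 m. All three are illustrative choices, and the darker-is-closer direction simply follows the description in this article.

```python
def depth_to_gray(depth_m, near_m, far_m):
    """Map a depth in metres to a gray level 0..255 (darker = closer)."""
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    # Clamp so that anything outside the working range saturates.
    clamped = min(max(depth_m, near_m), far_m)
    return round(255 * (clamped - near_m) / (far_m - near_m))


def encode_depth_map(depth_map, near_m=0.5, far_m=5.0):
    """Convert a 2D grid of depths (metres) into a grid of gray levels."""
    return [[depth_to_gray(d, near_m, far_m) for d in row] for row in depth_map]


# Made-up depths: a nearby desk edge on the left, a far wall on the right.
depth_map = [
    [0.5, 1.4, 5.0],
    [0.3, 2.3, 7.5],
]
gray_map = encode_depth_map(depth_map)
```

Values nearer than 0.5 m or farther than 5 m are clamped, so they appear as pure black or pure white respectively.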

Point cloud annotation takes depth perception to a higher level of detail by capturing the spatial coordinates of individual points within the scene. By annotating point clouds derived from depth-sensing technologies such as LiDAR (Light Detection and Ranging) or structured light sensors, developers can create highly accurate representations of the user’s environment, allowing for precise positioning and interaction of virtual objects.
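One common way to annotate a point cloud is to draw a 3D box around an object and label every point that falls inside it. The sketch below assumes axis-aligned boxes and coordinates in metres. The `Cuboid` class, the `label_points` helper, and the "unlabeled" default are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Cuboid:
    """Axis-aligned 3D box annotation (hypothetical schema, metres)."""
    label: str
    min_corner: Point
    max_corner: Point

    def contains(self, point: Point) -> bool:
        # A point is inside if every coordinate lies within the box bounds.
        return all(
            lo <= c <= hi
            for lo, c, hi in zip(self.min_corner, point, self.max_corner)
        )


def label_points(points, cuboids, default="unlabeled"):
    """Assign each point the label of the first cuboid that contains it."""
    labels = []
    for point in points:
        label = next((c.label for c in cuboids if c.contains(point)), default)
        labels.append(label)
    return labels


# Made-up scan points and one annotated desk.
points = [(0.5, 0.5, 0.5), (2.0, 2.0, 2.0), (1.0, 0.0, 0.7)]
desk = Cuboid("desk", (0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
point_labels = label_points(points, [desk])
```

Annotation tools for real scans often support rotated boxes as well, which would require transforming each point into the box's local frame before running the containment test.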

Depth perception has potential uses in fields such as navigation, spatial mapping, and robotics. For instance, in AR navigation apps, depth perception can assist users in navigating complex environments by providing depth-aware route guidance and obstacle detection.

In Conclusion

Image annotation serves as the backbone of AR/VR technology. Object recognition and tracking, scene understanding, gesture recognition, and depth perception all depend on annotated datasets, and together they shape how immersive the user experience feels.

However, building AR/VR applications is itself complex and resource-intensive, so finding the right expertise and resources to handle image annotation effectively in-house can be challenging. This is where outsourcing image annotation to third-party agencies comes into play. By outsourcing image annotation services, AR/VR developers can access a wealth of expertise and resources, including annotated datasets, annotation tools, and skilled annotators.

Moreover, outsourcing allows companies to focus on core development activities while offloading annotation tasks to professionals who can ensure accuracy, consistency, and scalability. Whether it’s annotating images for object recognition, segmenting scenes for spatial understanding, or labeling gestures for interaction, outsourcing can offer a cost-effective and efficient way to meet the demands of AR/VR development.